On April 26th, 2024, the Department of Homeland Security released a 28-page document outlining AI security guidelines for critical infrastructure owners.

While it is a step in the right direction, the whitepaper is vague, dry, and unhelpful.

In this article, I will summarize the paper to save you from reading it, give my thoughts on how it could be improved, and discuss how we can guard critical infrastructure from AI threats in the future.

Contents

DHS Whitepaper - Summary

AI Risks to Critical Infrastructure

Guidelines For Critical Infrastructure Owners

The Disconnect…

How Could It Be Improved?

How Can We REALLY Guard Our Critical Infrastructure?

Final Thoughts - The Future

DHS Whitepaper - Summary

Skim through this section if risk isn’t your thing…

On October 30, 2023, Joe Biden signed Executive Order 14110 - Safe, Secure, and Trustworthy Development and Use of Artifical Intelligence. This order mandated the Secretary of Homeland Security to assess the risks of AI in critical infrastructure, using the NIST AI Risk Management Framework.

AI Risks to Critical Infrastructure

The Cybersecurity and Infrastructure Security Agency (CISA) considered three categories of AI system-level risk when looking at critical infrastructure:

Attacks Using AI - Leveraging AI to facilitate harm, e.g. AI-enabled social engineering

Attacks Targeting AI Systems - Targeted attacks on AI systems supporting critical infrastructure, e.g. manipulating AI algorithms

Failures in AI Design and Implementation - Poorly designed models causing critical infrastructure to fail

Guidelines For Critical Infrastructure Owners

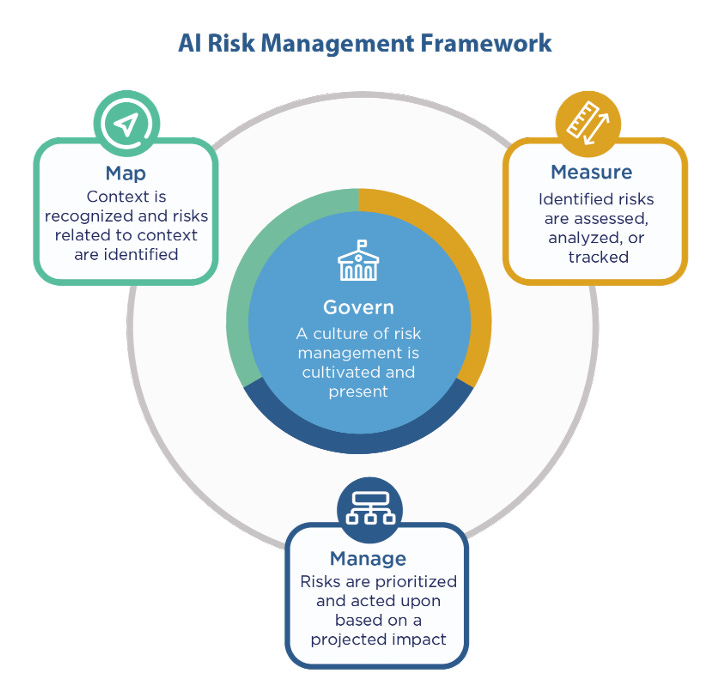

Infrastructure owners and operators are advised to apply the NIST AI Risk Management Framework, tailored to critical infrastructure:

Govern

Establish an organizational culture of AI risk management:

Establish transparency in AI system use

Detail plans for cyber risk management

Establish roles and responsibilities with AI vendors

Map

Understand your individual AI use context and risk profile:

Establish which AI systems in critical infrastructure operations should be subject to human supervision

Review AI vendor supply chains for security and safety risks

Gain and maintain awareness of new failure states

Measure

Develop systems to assess, analyze, and track AI risks:

Continuously test AI systems for errors or vulnerabilities

Build AI systems with resiliency in mind

Evaluate AI vendors and AI vendor systems

Manage

Prioritize and act upon AI risks to safety and security:

Monitor AI systems’ inputs and outputs

Prioritize identified AI safety and security risks

Implement new or strengthened mitigation strategies

The Disconnect…

Are you still awake?

I found this whitepaper difficult to read. The piece lacks specific action steps, and several “recommendations” seem incredibly generic.

Let me give you an example:

Apply mitigations prior to deployment of an AI vendor’s systems to manage identified safety and security risks and to address existing vulnerabilities, where possible.

“Apply mitigations”. What mitigations can we apply? As of writing, every major Large Language Model is vulnerable to prompt injection, leading to devastating attack chains if the model can also read public internet data.

While the whitepaper uses big words and sophisticated phrases, the advice seems very disconnected from the attacks we are seeing today (Input manipulation, training data poisoning, model inversion as identified by OWASP). A lack of awareness around specific attack vectors means the appropriate defenses may not be applied by policy owners.

How Could It Be Improved?

Security guidelines for infrastructure owners are notorious for focussing on risk management frameworks as opposed to real attacks. This is compounded by the fact that AI is so new - many exploits are not properly documented yet.

Below are some tips as to how this could be revised:

Make the document more concise(!) - there are too many similar points that don’t provide value

Make the document more targeted - it should consider specific features of critical infrastructure, such as their frequent reliance on legacy systems

Consult a greater number of technical leaders in the AI space - the whitepaper was clearly made by government risk officials. This works for other industries, but in a rapidly changing space like AI Security, technical experts should be consulted about the latest attacks.

How Can We REALLY Guard Our Critical Infrastructure?

It is too soon to integrate AI into critical infrastructure. Large language models have showcased the sheer number of security issues inherent to the AI models of today. Putting a volatile new technology into systems society depends on is a recipe for disaster.

The uncomfortable reality is that policymakers must implement regulations that severely restrict AI in high-risk industries such as critical infrastructure. These regulations need to be coauthored by technical experts in the AI Security field.

Final Thoughts - The Future

In summary, the DHS document shows society’s lack of preparedness for AI attacks. Over the next 5 years, I predict we will see several nation-state attacks on AI solutions embedded into critical infrastructure, leading to devastating impact. When this happens, perhaps the Department of Homeland Security will take AI Security more seriously.

If this article interests you, check out my piece on indirect prompt injection below. Thanks for reading.

Indirect Prompt Injection - The Biggest Challenge Facing AI

Since ChatGPT was released in November 2022, big tech has been racing to integrate LLM technology into everything. Music, YouTube videos, and hotel bookings are just a few examples. But as of writing, any LLM which can read data from external sources is inherently insecure. In this article, we will take a deep dive into indirect prompt injection attacks,…