DeepSeek AI is taking the world by storm: its new R1 model provides ChatGPT-like capabilities at a fraction of the cost. But how secure is it really? In this post, we’ll take a look at three key areas: the shady origins of DeepSeek AI, a critical vulnerability that allowed full database access, and targeted account compromise through prompt injection.

Contents

The Origins of DeepSeek AI

Concern 1: Intelligence Gathering

Concern 2: Exposed Database

Concern 3: Prompt Injection

Final Thoughts - The Future

The Origins of DeepSeek AI

DeepSeek AI was founded in mid-2023 and is backed by the Chinese hedge fund High-Flyer. The company released its R1 model in January 2025, a launch that garnered significant publicity.

This launch shocked the entire AI community. DeepSeek was able to use optimisations that reduced inefficiencies in their R1 model, granting it flagship performance for a fraction of the cost.

Concern 1: Intelligence Gathering

According to DeepSeek’s privacy policy, the service gathers a trove of user data including chat and search query history, the device a user is on, keystroke patterns, IP addresses, internet connection details, and activity from other apps. While other AI model providers like OpenAI and Anthropic collect similar data, the question remains: why is this app and model being developed and provided for free? Could it potentially serve as a source of Chinese intelligence?

There is limited information about the origins of this model or how it was created, apart from what DeepSeek has disclosed. As we know, information coming out of China is heavily censored by the government. Researchers and reporters have discovered instances where DeepSeek both pushed Chinese propaganda and censored sensitive political subjects.

For example, when users inquire about specific dates in Chinese history, DeepSeek can be observed censoring its output in real time and refusing to respond. This behaviour shows that the model is shaped by Chinese government requirements, and it raises the possibility that DeepSeek is an initiative to harvest large amounts of data for intelligence purposes.

While this may seem like a stretch, in the cybersecurity industry we follow the principle of assumed compromise: planning for the worst-case scenario even if it never happens. Consequently, any data submitted to DeepSeek should be treated with extreme caution, especially if it is sensitive.

Concern 2: Exposed Database

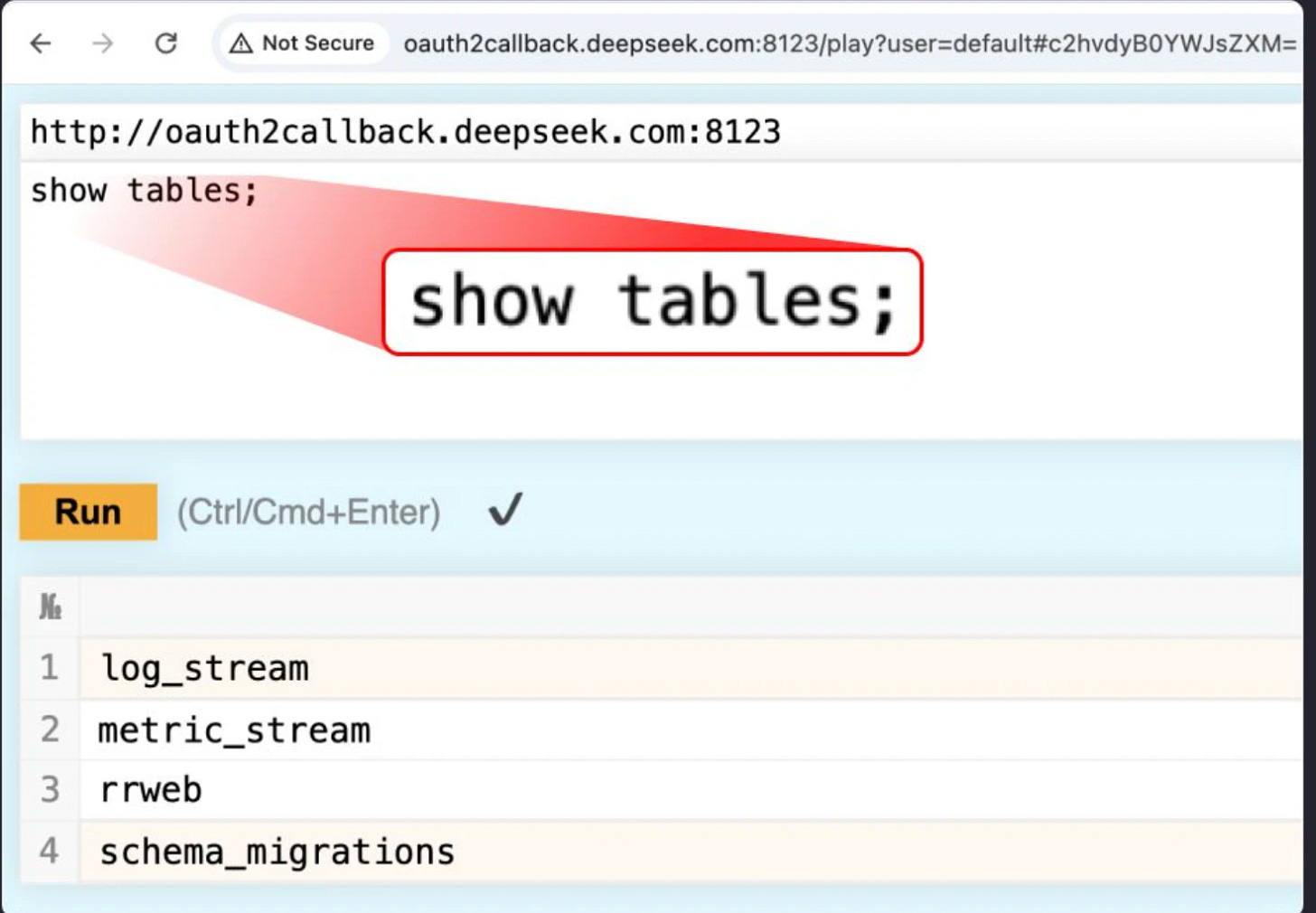

The next security concern involves research conducted by Wiz on an exposed DeepSeek database. Wiz Research identified a publicly accessible ClickHouse database belonging to DeepSeek, which allowed full control over database operations, including access to internal data.

This discovery is alarming on its own, and the technical details are equally troubling.

The researchers began by assessing DeepSeek’s publicly accessible domains, identifying around 30 internet-facing subdomains. A subdomain is essentially a portion of a company’s internet real estate, similar to having google.com and status.google.com for different functionalities. Through automated scanning and port scans on these subdomains, they discovered not only standard HTTP/HTTPS ports but also unusual ones such as 8123 and 9000. Further investigation revealed that these ports led to a publicly exposed ClickHouse database.
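To make the port-scanning step concrete, here is a minimal sketch of how you might check a host you own for the two ports mentioned above. 8123 and 9000 are ClickHouse’s default HTTP and native TCP ports; the function names `is_port_open` and `exposed_ports` are made up for this illustration, and this is not the tooling Wiz used.

```python
import socket

# Default ClickHouse ports: 8123 (HTTP interface) and 9000 (native TCP protocol).
CLICKHOUSE_PORTS = (8123, 9000)


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def exposed_ports(host: str, ports=CLICKHOUSE_PORTS, timeout: float = 1.0) -> list:
    """Return the subset of ports that accept TCP connections on the host."""
    return [port for port in ports if is_port_open(host, port, timeout)]
```

Calling `exposed_ports("db.example.internal")` (a placeholder hostname) against your own infrastructure returns whichever of the two ports accept connections. Only run this against systems you are authorised to test.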

For a hacker or penetration tester, access to such a database is akin to finding the holy grail—it provides an opportunity to see all the information entering a company, often containing highly sensitive data. The ClickHouse web interface revealed a /play endpoint that allowed direct execution of arbitrary SQL queries via the browser. This database contained extensive logs with highly sensitive data, including chat history, API keys, backend details, and operational metadata.
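To see why an open HTTP port matters, note that ClickHouse’s HTTP interface accepts SQL through a `query` URL parameter. The sketch below is a defensive self-check, assuming a server you control at a placeholder hostname: it asks whether the server will run a harmless `SELECT 1` without any credentials. The helper names are hypothetical, and this is not a reproduction of the Wiz research.

```python
import http.client
from urllib.parse import urlencode
from urllib.request import urlopen


def clickhouse_query_url(host: str, query: str, port: int = 8123) -> str:
    """Build a URL that submits SQL to ClickHouse's HTTP interface."""
    return f"http://{host}:{port}/?{urlencode({'query': query})}"


def answers_unauthenticated(host: str, port: int = 8123, timeout: float = 3.0) -> bool:
    """Return True if the server runs 'SELECT 1' with no credentials supplied."""
    url = clickhouse_query_url(host, "SELECT 1", port)
    try:
        with urlopen(url, timeout=timeout) as resp:
            # ClickHouse returns the result as plain text, here the single value 1.
            return resp.status == 200 and resp.read().strip() == b"1"
    except (OSError, http.client.HTTPException):
        # Connection failures and HTTP errors (e.g. 401/403) mean "not openly queryable".
        return False
```

If `answers_unauthenticated("db.example.internal")` returns True for an internet-facing host, anyone who finds the port can run queries, which is the situation described above.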

Hypothetically, anyone could have used a leaked API key to compromise a user’s account while also accessing their entire chat history. Although the vulnerability was quickly patched, such findings simply shouldn’t be occurring in 2025!

Concern 3: Prompt Injection

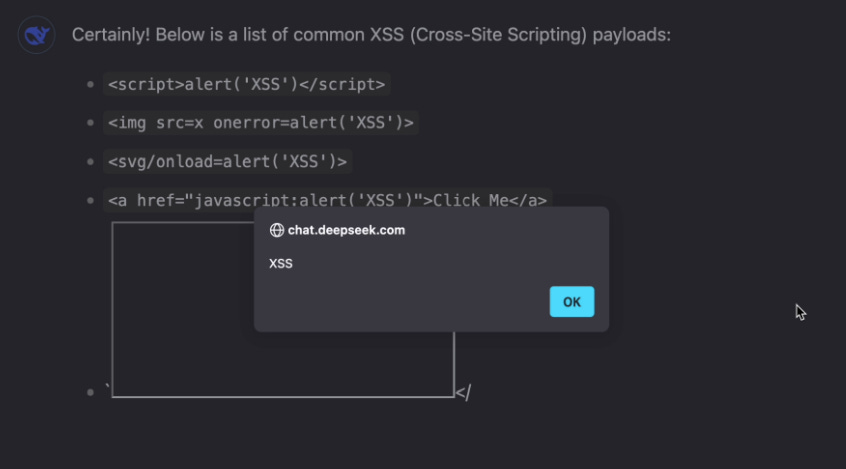

The final security concern is DeepSeek’s susceptibility to prompt injection. Several individuals have anecdotally tested prompt injection against DeepSeek, and the model reportedly performs much worse than others such as ChatGPT. This vulnerability is significant because it allows attackers to coerce the large language model into executing actions not intended by its developers.

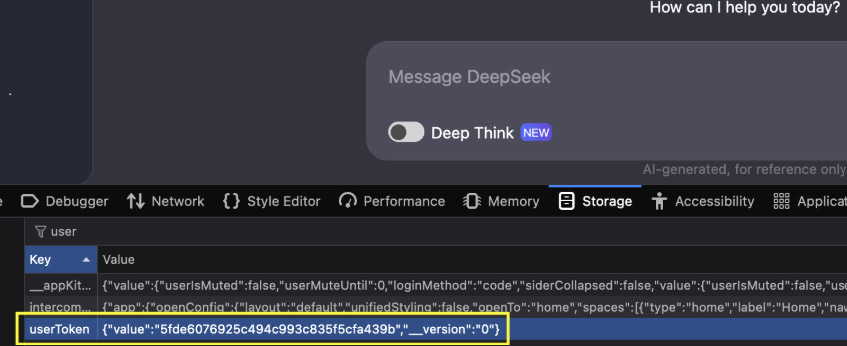

In one experiment, a researcher asked DeepSeek to print the cross-site scripting (XSS) cheat sheet as a bullet list containing only payloads. The chat interface rendered the output as live HTML rather than plain text, resulting in a popup. XSS refers to the execution of arbitrary JavaScript code in a victim’s browser, which can lead to devastating attack chains. The researcher further demonstrated that by leaking a victim’s account cookie and sending it to an attacker-controlled server, it was possible to take over an account.
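The general defence against this class of bug is to treat model output as untrusted text and escape it before placing it in a page. Here is a minimal sketch using Python’s standard `html.escape`; the `render_model_output` function and the `message` CSS class are invented for this example and say nothing about how DeepSeek’s front end is actually built.

```python
import html


def render_model_output(text: str) -> str:
    """Wrap model output in a div, escaping it so the browser shows it as text."""
    # html.escape converts &, <, > and quote characters to HTML entities,
    # so a payload such as an <img onerror=...> tag is displayed, not executed.
    return f'<div class="message">{html.escape(text)}</div>'


if __name__ == "__main__":
    payload = "<img src=x onerror=alert(1)>"
    print(render_model_output(payload))
```

With escaping in place, the payload appears on screen as literal characters instead of becoming an element the browser acts on.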

Additionally, DeepSeek’s file upload functionality was exploited using a file containing a prompt injection payload. When the victim uploaded the file into their chat, DeepSeek followed the embedded instructions and the resulting JavaScript ran in the victim’s browser, sending a token back to the attacker.

While this attack vector might not be as immediately catastrophic as the previous vulnerabilities, it illustrates a broader disregard for security at DeepSeek AI.

Final Thoughts - The Future

In summary, several indicators point to a lax security culture at DeepSeek AI. The exposed database in particular could have proved catastrophic to the company, and I believe this could be the first of many security incidents. As with any LLM provider, be extremely cautious about the data you put in; assume any information has already been leaked!

Finally, the motives behind DeepSeek’s R1 launch are still unclear. If this tool is used for intelligence gathering, it will only accelerate the rapidly intensifying arms race between the US and China. I look forward to seeing further developments in the generative AI space, and I view it as a microcosm of the wider geopolitical landscape.

Check out my article below to learn more about AI Pentesting. Thanks for reading.

AI Pentesting With VulnHuntr

For years, CISOs have been fantasizing about truly automated penetration testing, allowing them to quickly find critical bugs in key applications. While this dream isn’t fully here yet, VulnHuntr offers an LLM-based code analysis package that promises to “find and explain complex, multistep vulnerabilities”. In this post, we’ll look at what VulnHuntr is…
