On 10/06/24, Apple announced its long-awaited “Apple Intelligence” to the world. Apple Intelligence is a suite of AI tools integrated into existing functionality to let users “get things done effortlessly”.

As always, Apple has gone to great lengths to make this technology high-quality and watertight. But will it be 100% secure? In this post, we’ll look at what we know already based on Apple’s announcement, analyze this through a cybersecurity lens, and speculate on future security flaws in Apple Intelligence.

Contents

Overview - What Are We Getting?

A Job Well Done

On-Device Processing

Private Cloud Compute - A Revolution!

ChatGPT Integration - The Weakest Link

Siri Intents = Prompt Injection?

Final Thoughts - The Future

Overview - What Are We Getting?

Here’s a brief overview of what Apple Intelligence will encompass:

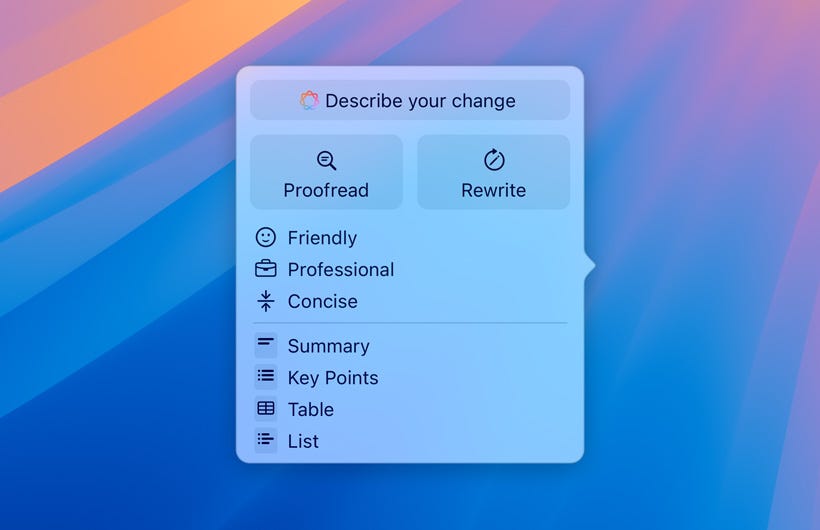

Writing Tools

Apple’s AI will allow it to manipulate any text - changing the tone of a passage, transforming text into lists, and summarizing articles. Harnessing the power of AI writing with one tap is an exciting prospect!

Image Playground

Apple has tackled image generation in a unique way, with their Image Playground. This tool enables you to create images in one of 3 styles: Animation, Illustration, or Sketch. Apple Intelligence also allows users to generate their own emojis based on a prompt.

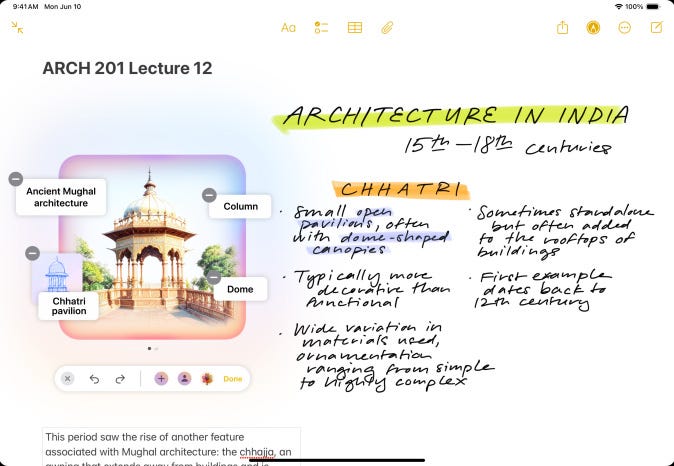

Siri with App Intents

Siri is being souped up! It will have Apple Intelligence built-in, visibility into on-screen content… and the capability to perform hundreds of actions.

We’ll dig into this one later…

A Job Well Done

Overall, Apple Intelligence has been meticulously thought out and looks to provide massive value for its users. Apple has bided their time and released a showstopper product, as they have done so many times before.

Now, let’s discuss the security. Apple has decided to take a three-pronged approach to run their AI - On-device processing, Private Cloud Compute, and ChatGPT integration. Let’s look at the security of each one in detail.

On-Device Processing

For simple requests, Apple will use an on-device Large Language Model to answer responses. This will provide fast and cheap AI for everyday use cases.

For Apple, keeping sensitive data on-device is a simple answer to securing it. But what about more complex requests? Enter Private Cloud Compute

Private Cloud Compute - A Revolution!

For larger queries, Apple devices will communicate with Private Cloud Compute (PCC) servers to generate responses. Large Language Model technology poses a challenge to data confidentiality since private user data must be processed unencrypted by a server.

PCC addresses the following security requirements:

Stateless computation on personal user data - User data is only used to fulfill the LLM request and deleted immediately after

Enforceable guarantees - Apple will use cryptography to ensure only authorized code can run on a PCC node

No privileged runtime access - No remote shell or debugging mechanisms will exist on the servers, and no user data will be recorded in logs

Non-targetability - Apple will create these devices with a hardened supply chain, minimizing the risk of supply chain attacks. They will also use “target diffusion” to ensure requests cannot be routed to specific servers

Verifiable transparency - The PCC software will be made public for researchers to inspect!

“This is an extraordinary set of requirements, and one that we believe represents a generational leap over any traditional cloud service security model” - Apple

Private Cloud Compute is arguably even more impressive than Apple Intelligence. On paper, the technology seems to be “unhackable”, and promises to set a new bar for cloud security.

Will this be the case in practice? Only time will tell.

ChatGPT Integration - The Weakest Link

Finally, when a request requires more real-world context, Apple will send it to ChatGPT and provide the answer to a user. This has sparked controversy in the AI community, with Elon Musk promising to ban Apple devices at his companies if they partner with OpenAI in this way!

Apple claims that OpenAI won’t store requests of this nature - but at the end of the day, user data will be processed by a third-party organization. Users will be notified of what data is being sent out. However, for regular users who leverage this ChatGPT integration, the data they submit in requests should be considered potentially compromised!

Siri Intents = Prompt Injection?

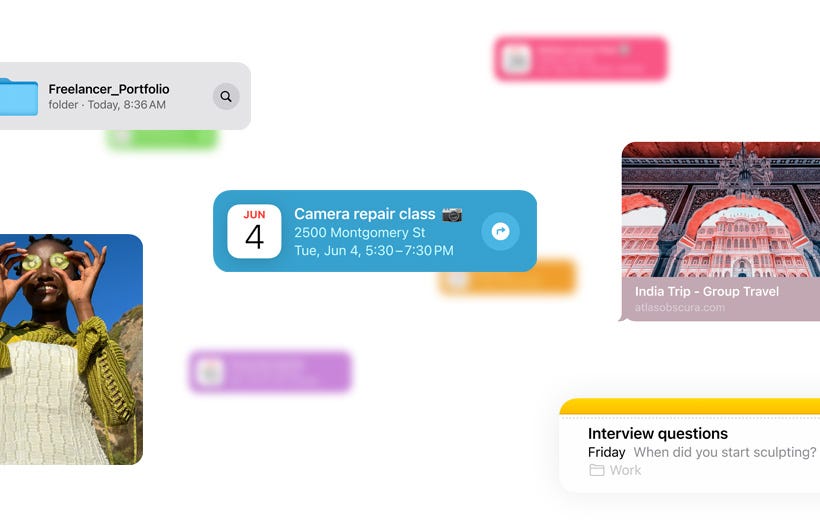

Siri is getting a major upgrade! It will have on-screen awareness, meaning it can act based on content showing on the device, and access to perform “hundreds of new actions“. An example is sending an iMessage.

Alarm bells immediately ring here! When an LLM takes in arbitrary input and then takes action, it is inherently insecure to Indirect Prompt Injection. You can read my blog post below to understand what this is:

Indirect Prompt Injection - The Biggest Challenge Facing AI

Since ChatGPT was released in November 2022, big tech has been racing to integrate LLM technology into everything. Music, YouTube videos, and hotel bookings are just a few examples. But as of writing, any LLM which can read data from external sources is inherently insecure. In this article, we will take a deep dive into indirect prompt injection attacks,…

I look forward to testing this thoroughly - a potential scenario is crafting a prompt that induces Siri to iMessage personal information to an attacker. Apple will undoubtedly have guardrails, and I can’t wait to try circumventing them.

Final Thoughts - The Future

Overall, Apple has clearly made security a priority when planning its AI strategy. This is a refreshing change from the slew of organizations racing to push AI as fast as possible with little heed to the potential risks.

While this has contributed to Apple being late to market with AI, I believe this will save the company time and money in the long run.

The Apple Intelligence Beta will drop in Autumn on Apple’s latest devices. I look forward to answering the following 3 questions when it does:

Is PCC as secure as Apple claims it is?

Is Siri Intents vulnerable to Indirect Prompt Injection?

Does the OpenAI integration mean private data will be sent to an untrusted third party?

If Apple gets this launch right, it will set a precedent for AI safety which will convince other companies to take it more seriously.

I hope they do.

Check out my article below to learn about a ChatGPT vulnerability I found. Thanks for reading.

ChatGPT - Send Me Someone's Calendar!

OpenAI recently introduced GPTs to premium users, allowing people to interact with third-party web services via a Large Language Model. But is this safe when AI is so easy to trick? In this post, I will present my novel research: exploiting a personal assistant GPT, causing it to unwittingly email the contents of someone’s calendar to an attacker.

Share this post