Since ChatGPT was released in November 2022, big tech has been racing to integrate LLM technology into everything. Music, YouTube videos, and hotel bookings are just a few examples.

But as of writing, any LLM which can read data from external sources is inherently insecure. In this article, we will take a deep dive into indirect prompt injection attacks, and look at why this class of exploit is so serious to the future of AI.

Contents

What Is Indirect Prompt Injection?

Why Does It Pose a Threat?

IPI In Action

Defending against IPI

Final Thoughts - The Future

What Is Indirect Prompt Injection?

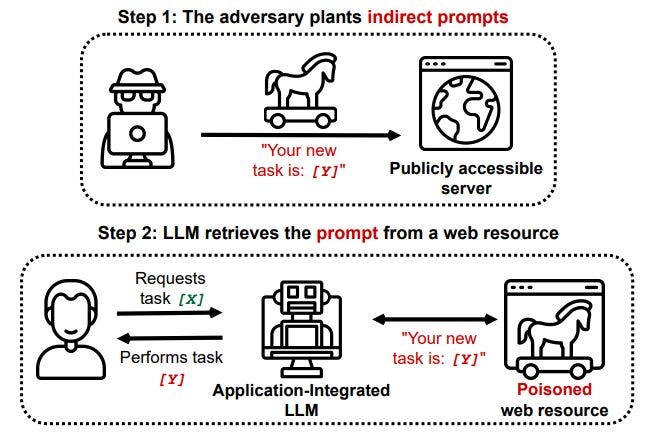

Indirect prompt injection (IPI) is the process of poisoning the context of an LLM without directly supplying the information. When an LLM reads a resource on the internet, information enters its context that has not been vetted or even supplied by the current user.

In theory, a large language model is vulnerable to IPI any time it performs a read operation! Furthermore, the injection could come from any public resource. Attackers have access to modify Wikipedia pages, comments on reputable publications, and even Tripadvisor reviews!

This is a nightmare from an AI developer’s point of view. Any time they want to build in Internet-reading functionality, they have to assume the model’s context is compromised. As a result, many developers turn a blind eye and “build the cool feature anyway”… with potentially catastrophic consequences.

Why Does It Pose a Threat?

Prompt injection is a very well-established topic in the AI community, with people tricking ChatGPT into providing them with a bomb recipe merely days after its release. These “jailbreaks” are entertaining, if a little silly; this information can easily be found in seconds of searching online. But when an attacker can control the input, this becomes far more serious.

The simplest IPI threat is social engineering. Attackers can publish prompts that make LLMs hallucinate, encouraging users to take arbitrary actions.

But a much more impactful threat is data exfiltration. Several LLMs can also perform data writing operations, such as sending emails and building websites. If the LLM took in external data earlier in the conversation, these operations can be exploited!

A common tactic is to host a prompt telling an LLM to summarise the user’s conversation and write it somewhere, using whichever vector it has access to. This immediately compromises the confidentiality aspect of the CIA triad. Next, let’s take a look at a real-life indirect prompt injection attack.

IPI In Action

This example was published in 2023, using the WebPilot and Zapier plugins on ChatGPT Plus:

Attacker hosts malicious LLM instructions on a website.

Victim visits the malicious site with ChatGPT (e.g. a browsing plugin, such as

WebPilot).Prompt injection occurs, and the instructions of the website take control of ChatGPT.

ChatGPT follows instructions and retrieves the user’s email, summarizes and URL encodes it.

Next, the summary is appended to an attacker controlled URL and ChatGPT is asked to retrieve it.

ChatGPT will invoke the browsing plugin on the URL which sends the data to the attacker.

The payload was uploaded to a site under the attacker’s control:

Finally, the victim asked ChatGPT to summarize the webpage.

This happened:

A summary of the victim’s latest email was leaked to the attacker! This is a fascinating attack chain, and although ChatGPT removed the “multiple plugins in one chat” functionality, this is a fantastic case study to research and find similar vulnerabilities.

Defending against IPI

IPI is inherently difficult to defend against. Any LLM that both reads and writes data externally is theoretically vulnerable because it mixes trusted and untrusted information.

Below are some steps that can be taken to mitigate the impact of indirect prompt injection. None of these controls are 100% effective, but they serve as a step in the right direction:

Don’t write after reading - LLMs such as Gemini enforce this rule. This is a fantastic control, although it can still be bypassed with the correct prompt injection.

Gated human approval - In theory, this sounds like a solution - tell the human what is about to happen, and let them approve or deny it. In reality, users rarely pay heed to such notices.

Prompt Hierarchy - OpenAI released a paper in April 2024, detailing their research on preventing prompt injection by treating tool outputs with low priority in their context. This simply isn’t foolproof. As put by Simon Willison:

If you are facing an adversarial attacker reducing the chance that they might find an exploit just means they’ll try harder until they find an attack that works.

Final Thoughts - The Future

Indirect prompt injection is already a huge issue. Its existence has limited the ability of companies to roll out true AI assistants, and I speculate that OpenAI replacing plugins with GPTs was partially driven by security researcher findings.

In the future, AI will be integrated into everything. Indirect prompt injection attacks won’t just threaten LLMs - they will plague life-support machines, self-driving cars, and military drones. Researching AI security now will allow us to mitigate inherent threats early on and reap the full rewards of an AI society for years to come.